Today, the realms of business, science, and technology are merging like never before. The amount of data now available to us has outstripped our tools for analyzing and using it. The result is a mountain of unstructured data waiting to be tapped. There’s gold in that mountain! The need is to get the right tool to dig it. For many today, that right tool is called Deep Learning.

Thursday, July 27, 2017

What is deep learning and how does it work?

There’s gold in that mountain! The need is to get the right tool to dig it. For many today, that right tool is called Deep Learning.

Source: http://andrewyuan.github.io/


Friday, July 21, 2017

An Introduction to Kafka

Learn the basics of Apache Kafka, an open-source stream processing platform, and learn how to create a general single broker cluster.

In this blog, I am going to get into the details like:

- What is Kafka?

- Getting familiar with Kafka.

- Learning some basics in Kafka.

- Creating a general single broker cluster.

So let’s get started!

What Is Kafka?

In simple terms, Kafka is a messaging system that is designed to be fast, scalable, and durable. It is an open-source stream processing platform. Apache Kafka originated at LinkedIn and later became an open-source Apache project in 2011, then a first-class Apache project in 2012. Kafka is written in Scala and Java. It aims at providing a high-throughput, low-latency platform for handling real-time data feeds.

Getting Familiar With Kafka

Apache describes Kafka as a distributed streaming platform that lets us:

- Publish and subscribe to streams of records.

- Store streams of records in a fault-tolerant way.

- Process streams of records as they occur.

Why Kafka?

In Big Data, an enormous volume of data is used. But how are we going to collect this large volume of data and analyze that data? To overcome this, we need a messaging system. That is why we need Kafka. The functionalities that it provides are well-suited for our requirements, and thus we use Kafka for:

- Building real-time streaming data pipelines that can get data between systems and applications.

- Building real-time streaming applications to react to the stream of data.

What Is a Messaging System?

A messaging system is a system that is used for transferring data from one application to another so that the applications can focus on data and not on how to share it. Kafka is a distributed publish-subscribe messaging system. In a publish-subscribe system, messages are persisted in a topic. Message producers are called publishers and message consumers are called subscribers. Consumers can subscribe to one or more topics and consume all the messages in those topics (we will discuss these terms later in the post).
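To make the publish-subscribe idea concrete, here is a minimal in-memory sketch. It is not Kafka's API; the class `TinyBroker` and the topic name `orders` are made up purely for illustration, and nothing here is distributed or durable.

```python
from collections import defaultdict


class TinyBroker:
    """Hypothetical in-memory stand-in for a publish-subscribe system."""

    def __init__(self):
        # topic name -> list of messages persisted in that topic
        self.topics = defaultdict(list)

    def publish(self, topic, message):
        # Publishers only append to a topic; they know nothing about subscribers.
        self.topics[topic].append(message)

    def consume(self, topic):
        # Every subscriber sees all messages persisted in the topic.
        return list(self.topics[topic])


broker = TinyBroker()
broker.publish("orders", "order-1")
broker.publish("orders", "order-2")

# Two independent subscribers read the same topic and get the same messages.
billing_view = broker.consume("orders")
shipping_view = broker.consume("orders")
print(billing_view, shipping_view)
```

The point of the sketch is the decoupling: the publisher never addresses a subscriber directly, and adding a second subscriber does not change the publishing code.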

Benefits of Kafka

Four main benefits of Kafka are:

- Reliability. Kafka is distributed, partitioned, replicated, and fault tolerant. Kafka replicates data and is able to support multiple subscribers. Additionally, it automatically balances consumers in the event of failure.

- Scalability. Kafka is a distributed system that scales quickly and easily without incurring any downtime.

- Durability. Kafka uses a distributed commit log, which means messages are persisted to disk as quickly as possible and replicated within the cluster, hence it is durable.

- Performance. Kafka has high throughput for both publishing and subscribing messages. It maintains stable performance even when dealing with many terabytes of stored messages.

Now, we can move on to our next step: Kafka basics.

Basics of Kafka

Apache.org states that:

- Kafka runs as a cluster on one or more servers.

- The Kafka cluster stores a stream of records in categories called topics.

- Each record consists of a key, a value, and a timestamp.
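The shape of a record described above can be sketched as a small data class. This is only an illustration: the class name `Record`, the field names, and the use of bytes and millisecond timestamps are assumptions made for the example, not Kafka's own client types.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class Record:
    """Hypothetical model of a record: a key, a value, and a timestamp."""

    key: Optional[bytes]  # assumed optional for this sketch
    value: bytes
    # Default to "now" in milliseconds when no timestamp is supplied.
    timestamp_ms: int = field(default_factory=lambda: int(time.time() * 1000))


record = Record(key=b"user-42", value=b"clicked")
print(record.key, record.value, record.timestamp_ms)
```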

Topics and Logs

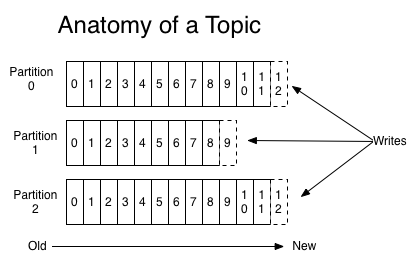

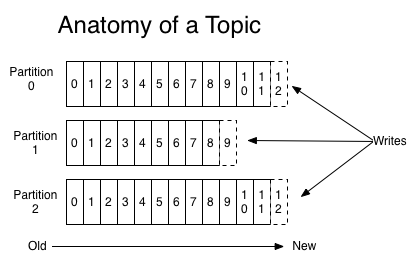

A topic is a feed name or category to which records are published. Topics in Kafka are always multi-subscriber — that is, a topic can have zero, one, or many consumers that subscribe to the data written to it. For each topic, the Kafka cluster maintains a partition log that looks like this:

Partitions

A topic may have many partitions so that it can handle an arbitrary amount of data. In the above diagram, the topic is configured into three partitions (partition{0,1,2}). Partition0 has 13 offsets, Partition1 has 10 offsets, and Partition2 has 13 offsets.

Partition Offset

Each partitioned message has a unique sequence ID called an offset. For example, in Partition1, the offset is marked from 0 to 9.
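As a rough sketch of how offsets behave, a partition can be modeled as an append-only list where the offset is simply the position of the message. The class `PartitionLog` is hypothetical and exists only for this example.

```python
class PartitionLog:
    """Hypothetical append-only log standing in for one partition."""

    def __init__(self):
        self._messages = []

    def append(self, message):
        # The offset of a new message is its position in the log.
        offset = len(self._messages)
        self._messages.append(message)
        return offset

    def read(self, offset):
        # Messages are addressed by offset and never change position.
        return self._messages[offset]


partition1 = PartitionLog()
offsets = [partition1.append(f"msg-{i}") for i in range(10)]
print(offsets)             # ten messages occupy offsets 0 through 9
print(partition1.read(9))  # the last of the ten messages
```

Ten appended messages get offsets 0 to 9, which matches the Partition1 example above.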

Replicas

Replicas are nothing but backups of a partition. If the replication factor of the above topic is set to 4, then Kafka will create four identical replicas of each partition and place them on different brokers in the cluster. Follower replicas do not serve reads or writes; clients talk to the partition's leader replica, and the followers exist to prevent data loss if the leader's broker fails.
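To picture where the copies end up, here is a deliberately simplified placement sketch. It spreads the replicas of each partition over distinct brokers in round-robin order; the function `assign_replicas` is hypothetical, and Kafka's real assignment logic is more involved than this.

```python
def assign_replicas(num_partitions, replication_factor, broker_ids):
    """Simplified round-robin placement; not Kafka's actual algorithm."""
    if replication_factor > len(broker_ids):
        # Each replica of a partition needs its own broker.
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment = {}
    for partition in range(num_partitions):
        assignment[partition] = [
            broker_ids[(partition + r) % len(broker_ids)]
            for r in range(replication_factor)
        ]
    return assignment


# Three partitions, replication factor 4, on an assumed five-broker cluster.
plan = assign_replicas(3, 4, [0, 1, 2, 3, 4])
for partition, brokers in plan.items():
    print(f"partition {partition} -> brokers {brokers}")
```

Note that a replication factor of 4 needs at least four brokers, so on the single broker cluster built later in this post the replication factor can only be 1.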

Brokers

Brokers are simple systems responsible for maintaining published data. Kafka brokers are stateless, so they use ZooKeeper for maintaining their cluster state. Each broker may have zero or more partitions per topic. For example, if there are 10 partitions on a topic and 10 brokers, then each broker will have one partition. But if there are 10 partitions and 15 brokers, then the first 10 brokers will have one partition each and the remaining five won’t have any partition for that particular topic. However, if there are 15 partitions but only 10 brokers, then some brokers will hold more than one partition, leading to unequal load distribution among the brokers. Try to avoid this scenario.
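The three scenarios above are easy to check with a little arithmetic. The sketch below assumes partitions are handed out to brokers one at a time in order, which is a simplification for illustration; `partitions_per_broker` is a made-up helper.

```python
def partitions_per_broker(num_partitions, num_brokers):
    """Count partitions per broker under a simple in-order hand-out."""
    counts = [0] * num_brokers
    for partition in range(num_partitions):
        counts[partition % num_brokers] += 1
    return counts


print(partitions_per_broker(10, 10))  # one partition on every broker
print(partitions_per_broker(10, 15))  # five brokers end up with nothing
print(partitions_per_broker(15, 10))  # five brokers carry two partitions
```

In the last case half of the brokers carry twice the load of the others, which is the uneven distribution the paragraph warns about.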

ZooKeeper

ZooKeeper is used for managing and coordinating Kafka brokers. It is mainly used to notify producers and consumers when a new broker joins the Kafka system or an existing broker fails. Based on that notification, producers and consumers decide how to proceed and start coordinating their work with another broker.

Cluster

A group of one or more brokers working together is called a Kafka cluster. A Kafka cluster can be expanded without downtime. These clusters are used to manage the persistence and replication of message data.

Kafka has four core APIs:

- The Producer API allows an application to publish a stream of records to one or more Kafka topics.

- The Consumer API allows an application to subscribe to one or more topics and process the stream of records produced to them.

- The Streams API allows an application to act as a stream processor, consuming an input stream from one or more topics and producing an output stream to one or more output topics, effectively transforming the input streams to output streams.

- The Connector API allows building and running reusable producers or consumers that connect Kafka topics to existing applications or data systems. For example, a connector to a relational database might capture every change to a table.
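The consume-transform-produce pattern behind the Streams API can be sketched without any Kafka dependency. Everything below is a toy: topics are plain Python lists, and the names `run_processor`, `raw-words`, and `upper-words` are invented for the example.

```python
# Topics modeled as plain lists; this is not the Kafka Streams API.
topics = {
    "raw-words": ["kafka", "stream", "record"],
    "upper-words": [],
}


def run_processor(topics, source, sink, transform):
    """Read every record from the source topic and write the result to the sink."""
    for record in topics[source]:
        topics[sink].append(transform(record))


run_processor(topics, "raw-words", "upper-words", str.upper)
print(topics["upper-words"])
```

A producer corresponds to whatever filled `raw-words`, a consumer to whatever later reads `upper-words`, and the stream processor sits in between.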

Up to now, we've discussed theoretical concepts to get ourselves familiar with Kafka. Now, we will use some of these concepts to set up our single broker cluster.

Creating a General Single Broker Cluster

These instructions for creating a general single broker cluster assume that you're using Ubuntu 16.04 and have Java 1.8 or later installed. If Java is not installed, you can get it here.

1. Download Kafka ZIP File

Download the Kafka 0.10.2.0 release from here and untar it using the following command on your terminal. Then, move to the directory containing our extracted Kafka.

> tar -xzf kafka_2.11-0.10.2.0.tgz

2. Start Kafka Server

Now that we have extracted our Kafka and moved to its parent directory, we can start our Kafka Server by just typing the following in our terminal:

> sudo kafka_2.11-0.10.2.0/bin/kafka-server-start.sh kafka_2.11-0.10.2.0/config/server.properties

Upon successful startup, we can see the following logs on our screen:

If the Kafka server doesn’t start, it is possible that your ZooKeeper instance is not running. ZooKeeper should be up as a daemon process by default; we can check that by entering this in the command line:

netstat -ant | grep :2181

If ZooKeeper isn’t up, we can set it up by:

sudo apt-get install zookeeperd

By default, ZooKeeper is bundled in Ubuntu’s default repository. Once installed, it will start as a daemon process and run on port 2181.
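The port check above can also be scripted. The following is a minimal sketch using only Python's standard library; the helper name `is_port_open` is made up for illustration, and an open port only shows that something is listening on 2181, not that it is a healthy ZooKeeper.

```python
import socket


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False


if __name__ == "__main__":
    # 2181 is the ZooKeeper port used throughout this post.
    print("Port 2181 open:", is_port_open("localhost", 2181))
```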

3. Create Your Own Topic (Producer)

Now that we have our Kafka and ZooKeeper instances up and running, we should test them by creating a topic and then consuming and producing data from that topic. We can do so by:

sudo kafka_2.11-0.10.2.0/bin/kafka-console-producer.sh --broker-list localhost:9092 --topic yourTopicName

Your topic (yourTopicName) will be created as soon as we press the Enter key. It will also work as your producer, and the cursor will wait at the command line for you to input some message, as we can see in the below snapshot.

4. Create the Consumer

Now that our producer is up and running, we need a consumer to consume the messages that our producer produces. This can be done by:

sudo kafka_2.11-0.10.2.0/bin/kafka-console-consumer.sh --zookeeper localhost:2181 --topic yourTopicName --from-beginning

This will fetch all our messages from the beginning that our producer has produced since it was up. We will get something like this:

Now we have both our producer and consumer Kafka instances up and running.
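To see what the `--from-beginning` flag changes, here is a small simulation in plain Python. It models the topic as a list and assumes that a consumer started without the flag only sees messages that arrive after it started; the function `consume` and its parameters are hypothetical.

```python
def consume(log, from_beginning, log_end_at_start):
    """Return the messages a consumer would see in this simplified model."""
    # With from_beginning, start at offset 0; otherwise start at the
    # end of the log as it was when the consumer started.
    start = 0 if from_beginning else log_end_at_start
    return log[start:]


log = ["m0", "m1", "m2"]              # produced before the consumer starts
end_when_consumer_started = len(log)
log.append("m3")                      # produced after the consumer starts

print(consume(log, True, end_when_consumer_started))   # everything in the topic
print(consume(log, False, end_when_consumer_started))  # only the new message
```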

Note: We can create multiple testing topics and then can produce and consume on these testing topics simultaneously.

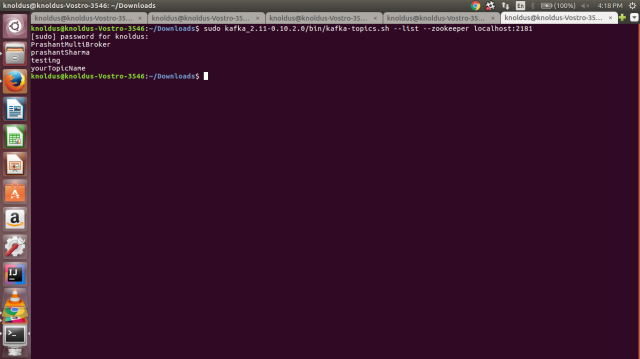

5. List Topics

Since there is no upper bound on the number of topics that we can create in Kafka, it is helpful to be able to see the complete list of topics created. This can be done by:

sudo kafka_2.11-0.10.2.0/bin/kafka-topics.sh --list --zookeeper localhost:2181

After we press Enter, we see a list of topics we've created, as shown in the snapshot below:

That is all we need to do for a single broker cluster. Next time, I will be going deeper into Kafka (less theory, more practical) and we’ll be looking at how to set up a multi-broker cluster.

Until then, happy reading!


Wednesday, July 19, 2017

Monday, July 3, 2017

25 Artificial Intelligence Terms You Need to Know

Some of the biggest definitions that you need to know when it comes to artificial intelligence.

As artificial intelligence becomes less of an ambiguous marketing buzzword and more of a precise ideology, it's increasingly becoming a challenge to understand all of the AI terms out there. So to kick off the brand new AI Zone, the Editorial Team here at DZone got together to define some of the biggest terms in the world of artificial intelligence for you.

A

Algorithms: A set of rules or instructions given to an AI, neural network, or other machine to help it learn on its own; classification, clustering, recommendation, and regression are four of the most popular types.

Artificial intelligence: A machine’s ability to make decisions and perform tasks that simulate human intelligence and behavior.

Artificial neural network (ANN): A learning model created to act like a human brain that solves tasks that are too difficult for traditional computer systems to solve.

Autonomic computing: A system's capacity for adaptive self-management of its own resources for high-level computing functions without user input.

C

Chatbots: A chat robot (chatbot for short) that is designed to simulate a conversation with human users by communicating through text chats, voice commands, or both. They are a commonly used interface for computer programs that include AI capabilities.

Classification: Classification algorithms let machines assign a category to a data point based on training data.

Cluster analysis: A type of unsupervised learning used for exploratory data analysis to find hidden patterns or grouping in data; clusters are modeled with a measure of similarity defined by metrics such as Euclidean or probabilistic distance.

Clustering: Clustering algorithms let machines group data points or items into groups with similar characteristics.
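As a small illustration of grouping by similarity, the sketch below assigns each point to its nearest centroid using Euclidean distance. This is only the assignment step found in k-means-style methods, the centroids are picked by hand rather than learned, and `assign_to_clusters` is a made-up name. It needs Python 3.8 or later for `math.dist`.

```python
import math


def assign_to_clusters(points, centroids):
    """Group points by their nearest centroid (Euclidean distance)."""
    clusters = {i: [] for i in range(len(centroids))}
    for point in points:
        nearest = min(
            range(len(centroids)),
            key=lambda i: math.dist(point, centroids[i]),
        )
        clusters[nearest].append(point)
    return clusters


points = [(1, 1), (1.5, 2), (8, 8), (9, 9.5)]
centroids = [(1, 1), (9, 9)]  # hand-picked for the example
clusters = assign_to_clusters(points, centroids)
print(clusters)
```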

Cognitive computing: A computerized model that mimics the way the human brain thinks. It involves self-learning through the use of data mining, natural language processing, and pattern recognition.

Convolutional neural network (CNN): A type of neural network that identifies and makes sense of images.

D

Data mining: The examination of data sets to discover and mine patterns from that data that can be of further use.

Data science: An interdisciplinary field that combines scientific methods, systems, and processes from statistics, information science, and computer science to provide insight into phenomena via either structured or unstructured data.

Decision tree: A tree and branch-based model used to map decisions and their possible consequences, similar to a flow chart.
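A decision tree can be sketched as nested questions with an outcome at each leaf. The tree below is hand-written rather than learned from data, and the questions and outcomes are invented for illustration.

```python
# Each inner node asks a yes/no question; each leaf is a final decision.
tree = {
    "question": "is_raining",
    "yes": {
        "question": "have_umbrella",
        "yes": "walk",
        "no": "take the bus",
    },
    "no": "walk",
}


def decide(node, facts):
    """Follow the branches that match the facts until a leaf is reached."""
    while isinstance(node, dict):
        branch = "yes" if facts[node["question"]] else "no"
        node = node[branch]
    return node


print(decide(tree, {"is_raining": True, "have_umbrella": False}))
print(decide(tree, {"is_raining": False}))
```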

Deep learning: The ability for machines to autonomously mimic human thought patterns through artificial neural networks composed of cascading layers of information.

F

Fluent: A type of condition that can change over time.

G

Game AI: A form of AI specific to gaming that uses an algorithm to replace randomness. It is a computational behavior used in non-player characters to generate human-like intelligence and reaction-based actions taken by the player.

K

Knowledge engineering: Focuses on building knowledge-based systems, including all of the scientific, technical, and social aspects of it.

M

Machine intelligence: An umbrella term that encompasses machine learning, deep learning, and classical learning algorithms.

Machine learning: A facet of AI that focuses on algorithms, allowing machines to learn without being explicitly programmed and change when exposed to new data.

Machine perception: The ability for a system to receive and interpret data from the outside world similarly to how humans use our senses. This is typically done with attached hardware, though software is also usable.

N

Natural language processing: The ability for a program to recognize human communication as it is meant to be understood.

R

Recurrent neural network (RNN): A type of neural network that makes sense of sequential information and recognizes patterns, and creates outputs based on those calculations.

S

Supervised learning: A type of machine learning in which output datasets train the machine to generate the desired algorithms, like a teacher supervising a student; more common than unsupervised learning.

Swarm behavior: From the perspective of the mathematical modeler, it is an emergent behavior arising from simple rules that are followed by individuals and does not involve any central coordination.

U

Unsupervised learning: A type of machine learning algorithm used to draw inferences from datasets consisting of input data without labeled responses. The most common unsupervised learning method is cluster analysis.
